Find and Fix Index Coverage Errors in Google Search Console

Google Search Console's Index Coverage report provides feedback on your site’s crawling and indexing process.

The reported issues break down in four statuses:

- Valid

- Valid with warnings

- Error

- Excluded

Each status consists of different issue types that zoom in on specific issues Google has found on your site.

As you know, Google Search ConsoleGoogle Search Console

The Google Search Console is a free web analysis tool offered by Google.

Learn more is an essential part of every SEO’s toolbox.

Among other things, Google Search Console reports on your organic performance and how they fared when crawling and indexing your site. The latter topic is covered in their 'Index Coverage report', which this article is all about.

After reading this article, you’ll have a firm understanding of how to leverage the Index Coverage report to improve your SEO performance.

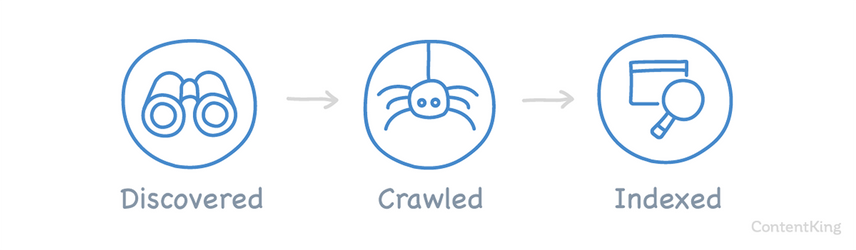

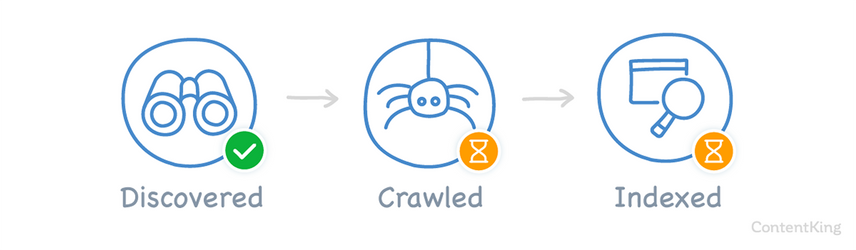

Before we dig in, here’s a brief primer on discovering, crawling, indexing, and ranking:

- Discovering: in order to crawl a URL, search engines first need to discover it. There are various ways they can do so, such as: following links from other pages (both on-site and off-site) and processing XML sitemaps. URLs that have been discovered are then queued for crawling.

- Crawling: during the crawling phase, search engines request URLs and gather information from them. After an URL is received, it’s handed over to the Indexer, which handles the Indexing process.

- Indexing: during indexing, search engines try to make sense of the information produced by the crawling phase. To put it somewhat simply, during indexing a URL’s authority and relevance for queries is determined.

When URLs are indexed, they can appear in search engineSearch Engine

A search engine is a website through which users can search internet content.

Learn more result pages (SERPs).

Let this all sink in for a minute.

This means your pages can only appear in the SERPs if your pages made it successfully through the last phase — indexing.

What is the Google Search Console Index Coverage report?

When Google is crawling and indexing your site, they keep track of the results and report them in Google Search Console’s Index Coverage report .

It’s basically feedback on the more technical details of your site’s crawling and indexing process. In case they detect pressing issue, they send notifications. These notifications are usually delayed though, so don't solely rely on these notifications to learn about high-impact SEO issues.

Google's feedback is categorized in four statuses:

- Valid

- Valid with warnings

- Excluded

- Error

When should you use the Index Coverage report?

Google says that if your site has fewer than 500 pages, you probably don’t need to use the Index Coverage report. For sites like this, they recommend using their site: operator.

We strongly disagree with this.

If organic traffic from Google is essential to your business, you do need to use their Index Coverage report, because it provides detailed information and is much more reliable than using their site: operator to debug indexing issues.

The Index Coverage report explained

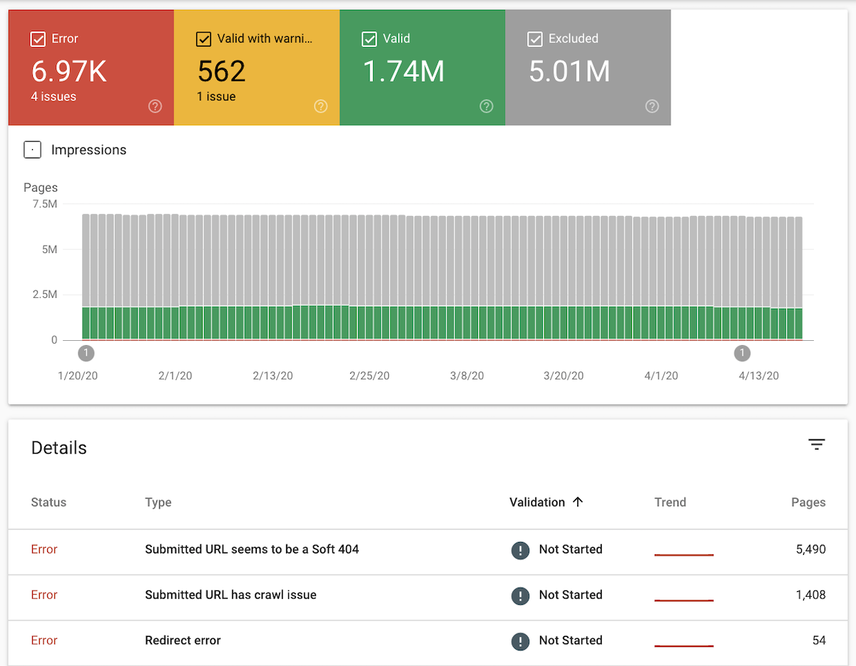

The screenshot above is from a fairly large site with lots of interesting technical challenges.

Find your own Index Coverage report by following these steps:

- Log on to Google Search Console.

- Choose a property.

- Click

CoverageunderIndexin the left navigation.

The Index Coverage report distinguishes among four status categories:

- Valid: pages that have been indexed.

- Valid with warnings: pages that have been indexed, but which contain some issues you may want to look at.

- Excluded: pages that weren’t indexed because search engines picked up clear signals they shouldn’t index them.

- Error: pages that couldn’t be indexed for some reason.

Each status consists of one or more types. Below, we’ll explain what each type means, whether action is required, and if so, what to do.

The Index Coverage report gives you a fantastic overview and understanding of how Google views your website. One piece of general housekeeping that gets missed often is reviewing the valid pages within Search Console. Doing so allows you to identify if any pages are currently being indexed that shouldn't be.

We often see a lot of parameter based pages being indexed alongside the canonical versions of these pages, which can lead to duplication issues and an unoptimized use of crawls. When identifying these pages, you can then use the URL Parameter tool within Search Console to tell Google how to treat these pages and, if needed, block them from being crawled, giving you a much better overview of your website and Google's crawling behavior moving forward.

Valid URLs

As mentioned above, ”valid URLs” are pages that have been indexed. The following two types fall within the “Valid” status:

- Submitted and indexed

- Indexed, not submitted in sitemap

Submitted and indexed

These URLs were submitted through an XML sitemap and subsequently indexed.

Action required: none.

Indexed, not submitted in sitemap

These URLs were not submitted through an XML sitemap, but Google found and indexed them anyway.

Action required: verify if these URLs need to be indexed, and if so add them to your XML sitemap. If not, make sure you implement the robots noindex directive and optionally exclude them in your robots.txt if they can cause crawl budget issues.

If you have an XML sitemap, but you simply haven’t submitted it to Google Search Console, all URLs will be reported with the type: “Indexed, not submitted in sitemap” – which is a bit confusing.

It makes sense to split the XML sitemap into smaller ones for large sites (say 10,000+ pages), as this helps you quickly gain insight in any indexability issues per section or content type.

Adding XML sitemaps per folder or partial subsets provides more granularity to your data.

Keep in mind that most Google Search Console reports are limited in the number of errors / suggestions Google gives back, so having more XML sitemaps results in more options to get detailed data.

The index coverage report is one of the best parts of the new(ish) Google Search Console. For clients with an up-to-date XML sitemap, the "indexed, not submitted" issue in sitemap report can unearth some interesting insight. Which URLs is Google indexing that they shouldn’t be and what are the patterns? Using the filter inside the report, you can segment common URL patterns and check the volume of impacted URLs at the bottom of the report.

For highly impacted areas, you can then explore the "Excluded" status report to get the root cause for why URLs are being indexed. For example, did Google opt for a different canonical or are redirects causing indexing issues?

Valid URLs with warnings

The “Valid with warnings” status only contains two types:

- “Indexed, though blocked by robots.txt”

- "Indexed without content"

Indexed, though blocked by robots.txt

Google has indexed these URLs, but they were blocked by your robots.txt file. Normally, Google wouldn’t have indexed these URLs, but apparently they found links to these URLs and thus went ahead and indexed them anyway. It’s likely that the snippets that are shown are suboptimal.

Please note that this overview also contains URLs that were submitted through XML sitemaps since January 2021 .

Action required: review these URLs, update your robots.txt, and possibly apply robots noindex directives.

Indexed without content

Google has indexed these URLs, but Google couldn't find any content on them. Possible reasons for this could be:

- Cloaking

- Google couldn't render the page, because they were blocked and received a HTTP status code 403 for example.

- The content is in a format Google doesn't index

- An empty page was published.

Action required: review these URLs to double-check whether they really don't contain content. Use both your browser, and Google Search Console's URL Inspection Tool to determine what Google sees when requesting these URLs. If everything looks fine, just request reindexing.

Excluded URLs

The “Excluded” status contains the following types:

- Alternate page with proper canonical tag

- Blocked by page removal tool

- Blocked by robots.txt

- Blocked due to access forbidden (403)

- Blocked due to other 4xx issue

- Blocked due to unauthorized request (401)

- Crawl anomaly

- Crawled - currently not indexed

- Discovered - currently not indexed

- Duplicate without user-selected canonical

- Duplicate, Google chose different canonical than user

- Duplicate, submitted URL not selected as canonical

- Excluded by ‘noindex’ tag

- Not found (404)

- Page removed because of legal complaint

- Page with redirect

- Soft 404

The "Excluded" section of the Coverage report has quickly become a key data source when doing SEO audits to identify and prioritize pages with technical and content configuration issues. Here are some examples:

- To identify URLs that have crawling and indexing problems that are not always found in your own crawling simulations, which are particularly useful when you don't have access to web logs to validate.

- To help prioritize your technical and content optimization efforts by putting into context what issues are affecting more pages as seen by Google directly, the percentage of pages that are wasting your site's crawl budget, those featuring poor content that are not found worthy to be indexed, those triggering errors that are also hurting the user experience, etc.

- To confirm in which content duplication scenarios you might think you have fixed by using canonical tags, Google has overlooked their configuration because these pages are still being referred by others with mixed signals and configurations that you might have overlooked and can now tackle.

- To identify if your pages are suffering from Soft 404 issues.

Alternate page with proper canonical tag

These URLs are duplicates of other URLs, and are properly canonicalized to the preferred version of the URL.

Action required: if these pages shouldn't be canonicalized, change the canonical to make it self-referencing. Additionally, keep an eye on the amount of pages listed here. If you're seeing a big increase while your site hasn't increased that much in indexable pages, you could potentially be dealing with poor internal link structure and/or crawl budget issue.

Blocked by page removal tool

These URLs are currently not shown in Google’s search results because of a URL removal request. When URLs are hidden in this way, they are hidden from Google’s search results for 90 days. After that period, Google may bring these URLs back up to the surface.

The URL removal request feature should only be used as a quick, temporary measure to hide URLs. We always recommend taking additional measures to truly prevent these URLs from popping up again.

Action required: send Google a clear signal that they shouldn’t index these URLs via the robots noindex directive and make sure that these URLs are recrawled before the 90 days expire.

Blocked by robots.txt

These URLs are blocked because of the site’s robots.txt file and are not indexed by Google. This means Google has not found signals strong enough to warrant indexing these URLs. If they had, the URLs would be listed under “Indexed, though blocked by robots.txt”.

Action required: make sure there aren’t any important URLs among the ones listed in this overview.

Blocked due to access forbidden (403)

Google wasn't allowed to access these URLs and received a 403 HTTP response code.

Action required: make sure that Google (and other search engines) have unrestricted access to URLs you want to rank with. If URLs that you don't want to rank with are listed under this issue type, then it's best to just apply the noindex directive (either in the HTML source or HTTP header).

Blocked due to other 4xx issue

Google couldn't access these URLs because they received 4xx response codes other than the 401, 403 and 404. This can happen with malformed URLs for example, these sometimes return the 400 response code.

Action required: try fetching these URLs using the URL inspection tool to see if you can replicate this behavior. If these URLs are important to you, investigate what’s going on, fix the issue and add the URLs to your XML sitemap. If you don't want to rank with these URLs, then just make sure you remove any references to them.

Blocked due to unauthorized request (401)

These URLs are inaccessible to Google because upon requesting them, Google received a 401 HTTP response, meaning they weren’t authorized to access the URLs. You’ll typically see this for staging environments, which are made inaccessible to the world using HTTP Authentication.

Action required: make sure there aren’t any important URLs among the ones listed in this overview. If there are, you need to investigate why, because that would be a serious SEO issue. If your staging environment is listed, investigate how Google found it, and remove any references to it. Remember, both internal and external links can be the cause of this. If search engines can find those, it's likely visitors can as well.

Crawl anomaly

🛎️ Crawl anomaly type has been retired

With January 2021's Index Coverage update , the crawl anomaly issue type has been retired. Instead, you'll now find the more specific issue types:

These URLs weren’t indexed because Google encountered a “crawl anomaly” when requesting them. Crawl anomalies can mean they received response codes in the 4xx and 5xx range that aren’t listed with their own types in the Index Coverage report.

Action required: try fetching some URLs using the URL inspection tool to see if you can replicate the issue. If you can, investigate what’s going on. If you can’t find any issues and everything works fine, keep an eye on it, as it can just be a temporary issue.

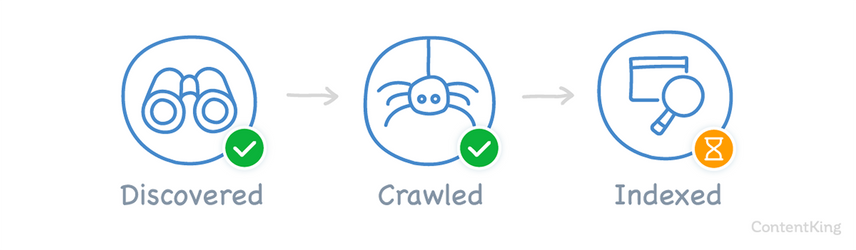

Crawled - currently not indexed

These URLs were crawled by Google, but haven’t been indexed (yet). Possible reasons why a URL may have this type:

- The URL was recently crawled, and is still due to be indexed.

- Google knows about the URL, but hasn’t found it important enough to index it. For instance because it has few to no internal linksInternal links

Hyperlinks that link to subpages within a domain are described as "internal links". With internal links the linking power of the homepage can be better distributed across directories. Also, search engines and users can find content more easily.

Learn more, duplicate contentDuplicate Content

Duplicate content refers to several websites with the same or very similar content.

Learn more or thin content.

Action required: make sure there aren’t important URLs among the ones in this overview. If you do find important URLs, check when they were crawled. If it’s very recent, and you know this URL has enough internal links to be indexed, it’s likely that will happen soon.

"Crawled - currently not indexed" is one report in Search Console that has the potential to be the most actionable.

Unfortunately, it also requires you to play detective because Google won't actually tell you why the URL isn't indexed. Reasons could include: thin content, low quality, duplication, pagination, redirection, or Google only recently discovered the page and will index it soon.

If you find the page is actually important and should be indexed, this is your opportunity to take action.

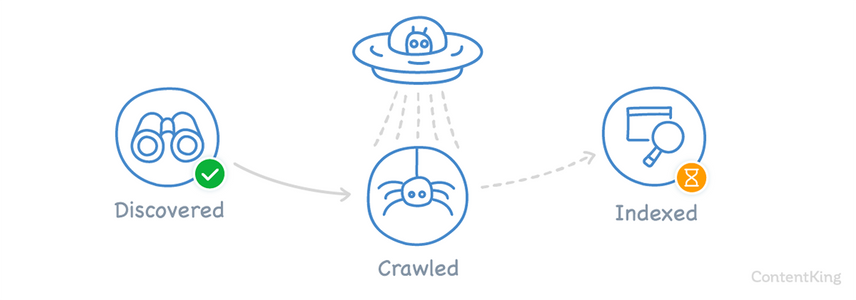

Discovered - currently not indexed

These URLs were found by Google but haven’t been crawled—and therefore indexed—yet. Google knows about them, and they’re queued for crawling. This can be because Google has requested these URLs and wasn’t successful because the site was overloaded, or because they simply didn’t get to crawling them yet.

Action required: keep an eye on this. If the number of URLs increases, you might be having crawl budget issues: your site is demanding more attention than Google wants to spend on it. This can be because your site doesn’t have enough authority or is too slow or often unavailable.

This URL state is part of the natural process to some extent, and keep in mind that this report can lag a little behind the actual state.

Always first confirm with the URL inspection tool what the actual state is, and in case a huge amount of important pages hang around in here: take a careful look at what google is crawling (your logfiles are your friend!).

Check if Google’s getting bogged down in low-value crawl traps, like combinations of filters, or things like calendars of events that create near infinite groups of URLs.

Duplicate without user-selected canonical

These URLs are duplicates according to Google. They aren’t canonicalized to the preferred version of the URL, and Google thinks these URLs aren’t the preferred versions. Therefore, they’ve decided to exclude these URLs from their index.

Oftentimes, you’ll find PDF files that are 100% duplicates of other PDFs among these URLs.

Action required: add canonical URLs to the preferred versions of the URLs such as for example a product detail page. If these URLs shouldn’t be indexed at all, make sure to apply the noindex directive through the meta robots tag or X-Robots-Tag HTTP Header. When you’re using the URL Inspection tool, Google may even show you the canonical version of the URL.

If you see a significant number of URLs falling into the "duplicate without user-selected canonical" issue category, this can often illuminate a sitewide issue, such as a misplaced canonical tag, a broken

<head>, or the canonical being unintentionally modified or removed by JavaScript.

Duplicate, Google chose different canonical than user

Google found these URLs on its own and considers them duplicates. Even though you canonicalized them to your preferred URL, Google chooses to ignore that and apply a different canonical.

You’ll often find that Google selects different canonicals on multi-language sites with highly similar pages and thin content.

Action required: Use the URL inspection tool to learn which URL Google has selected as the preferred URL and see if that makes more sense. For instance, it’s possible Google has selected a different canonical because it has more links and/or more content.

It's Google’s choice to pick one version of a page and save on indexing, but as an SEO, it’s not great that Google ignores your choice.

This can happen when a website has similar content with a small localisation to different markets, or pages that are duplicated across a website. Remember, the hreflang attribute is a hint as well, so it won’t necessarily solve your problem. Google may still serve the right URL, however, it shows the title and description of their selected version.

Unfortunately, GSC doesn’t tell us how to fix it, but at least you know something is wrong and can see how big the problem is. Some possible solutions are to create unique content (if your hreflang is not enough) or to noindex the copies of these pages.

Duplicate, submitted URL not selected as canonical

You’ve submitted these URLs through an XML sitemap, but they don’t have a canonical URL set. Google considers these URLs duplicates of other URLs, and has therefore chosen to canonicalize these URLs with Google-selected canonical URLs.

Please note that this type is very similar to type Duplicate, Google chose different canonical than user, but is different in two ways:

- You explicitly asked for Google to index these pages.

- You haven’t defined canonical URLs.

Action required: add proper canonical URLs that point to the preferred version of the URL.

When performing website migrations, it's a common best practice to keep the XML sitemap that contains the old URLs available to speed up the migration process. These old URLs will be listed under Duplicate, submitted URL not selected as canonical as long as they are included in the XML sitemap. After removing them from the XML sitemap, the URLs will move to the Page with redirect status.

Excluded by ‘noindex’ tag

These URLs haven’t been indexed by Google because of the noindex directive (either in the HTML source or HTTP header).

Action required: make sure there aren’t important URLs among the ones listed in this overview. If you do find important URLs, remove the noindex directive, and use the URL Inspection tool to request indexing. Double-check whether there are any internal links pointing to these pages, as you not want these noindex'ed pages to be publicly available.

Please note that, if you want to make pages inaccessible, the best way to go about that is to implement HTTP authentication.

When checking the

Excluded by ‘noindex’ tagsection, it's not only important to ensure that important pages aren't included but also that low quality pages are included.If you know that your site generates a large amount of content that should have the "noindex" tag, check to ensure it's included in this report.

Not found (404)

These URLs weren’t included in an XML sitemap, but Google found them somehow and can’t index them because they returned a HTTP status code 404. It’s possible Google found these URLs through other sites, or that these URLs existed in the past.

Action required: make sure there aren’t important URLs among the ones listed in this overview. If you do find important URLs, restore the contents on these URLs or 301 redirect the URL to the most relevant alternative. If you don't redirect to a highly relevant alternative, this URL is likely to be seen as a soft 404.

Page removed because of legal complaint

These URLs were removed from Google’s index because of a legal complaint.

Action required: make sure that you’re aware of every URL that’s listed in this overview, as someone with malintent may have requested your URLs to be removed from Google’s index.

Page with redirect

These URLs are redirecting, and are therefore not indexed by Google.

Action required: none.

When you’re involved in a website migration this overview of redirecting pages does come in handy when creating a redirect plan.

Soft 404

These URLs are considered soft 404 responses, meaning that the URLs don’t return a HTTP status code 404 but the content gives the impression that it is in fact a 404 page, for instance by showing “Page can’t be found” message. Alternatively, these errors can be the result of redirects pointing to pages that are considered not relevant enough by Google. Take for example a product detail page that's been redirected to its category pages, or even to the home page.

Action required: if these URLs are real 404s, make sure they return a proper 404 HTTP status code. If they’re not 404s at all, then make sure the content reflects that.

On e-commerce sites I often see soft 404 errors. Most of the time it’s benign, but it could also point to an issue with how Google is valuing your pages. Always take a look, and see if your content makes sense, or if you've redirected this URL whether it's a relevant URL.

Error URLs

The “Error” status contains the following types:

- Redirect error

- Server error (5xx)

- Submitted URL blocked by robots.txt

- Submitted URL blocked due to other 4xx issue

- Submitted URL has crawl issue

- Submitted URL marked ‘noindex’

- Submitted URL not found (404)

- Submitted URL seems to be a Soft 404

- Submitted URL returned 403

- Submitted URL returns unauthorized request (401)

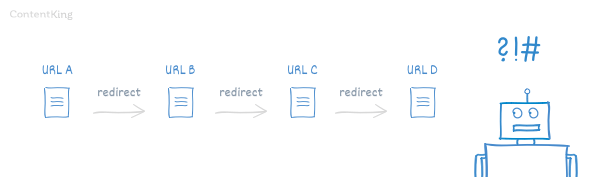

Redirect error

These redirected URLs can’t be crawled because Google encountered redirect errors. Here are some examples of potential issues Google may have run into:

- Redirect loops

- Redirect chains that are too long (Google follows five redirects per crawl attempt)

- Redirect to a URL that’s too long

Action required: investigate what’s going on with these redirects and fix them. Here’s how to easily check your HTTP status codes so you can start debugging them.

Server error (5xx)

These URLs returned a 5xx error to Google, stopping Google from crawling this page.

Action required: investigate why the URL returned a 5xx error, and fix it. Oftentimes, you see that these 5xx errors are only temporary because the server was too busy. Keep in mind that the user-agent making the requests can influence what HTTP status code is returned, so make sure to use Googlebot’s user-agent.

Make sure to check your log files and rate limiting setup. Using software to block scrapers or malicious users can result in search engine bots getting blocked too. Usually requests are blocked before the request reaches the server where log files are collected so don't forget to check both sources to identify possible problems.

Submitted URL blocked by robots.txt

You submitted these URLs through an XML sitemap, but they weren't indexed because Google’s blocked through the robots.txt file. This type is highly similar to two other types we’ve already covered above.

Here’s how this one is different:

- If the URLs would have been indexed, they would have been listed under “Indexed, though blocked by robots.txt”.

- If the URLs are indexed and not submitted through an XML sitemap, they’d be listed under type "Blocked by robots.txt".

These are subtle differences, but a big help when it comes to debugging issues like these.

Action required:

- If there are important URLs listed, make sure you prevent them from being blocked through the robots.txt file. Find the robots.txt directive by selecting a URL, and then clicking the

TEST ROBOTS.TXT BLOCKINGbutton on the right hand side. - URLs that shouldn’t be accessible to Google, should be removed from the XML sitemap.

The ‘Submitted URL blocked by robots.txt’ feature is immensely helpful to find out where we messed up (and gives us a chance to fix it quickly!). This is one of the first things to check after a site relaunch or migration has taken place.

Large aggregator or e-commerce sites tend to leave important directories disallowed within their robots.txt file post-production launch. This section is also helpful to point out outdated XML sitemaps that aren’t being updated as frequently as they should.

Submitted URL blocked due to other 4xx issue

You submitted these URLs through an XML sitemap, but Google received 4xx response codes other than the 401, 403 and 404.

Action required: try fetching these URLs using the URL inspection tool to see if you can replicate the issue. If you can, investigate what’s going on and fix it. If these URLs aren't working properly, and shouldn't be indexed then remove them from the XML sitemap.

Submitted URL has crawl issue

You submitted these URLs through an XML sitemap, but Google encountered crawl issues. This “Submitted URL has crawl issue” type is the “catch all” for crawl issues that don’t fit in any of the other types.

Oftentimes, these crawl issues are temporary in nature and will receive a “regular” classification (such as for example “Not found (404)") upon re-checking them.

Action required: try fetching some URLs using the URL inspection tool to see if you can replicate the issue. If you can, investigate what’s going on. If you can’t find any issues and everything works fine, keep an eye on it, as it can just be a temporary issue.

Submitted URL marked ‘noindex’

You submitted these URLs through an XML sitemap, but they’ve got the noindex directive (either in the HTML source or HTTP header).

Action required:

- If there are important URLs listed, make sure to remove the noindex directive.

- URLs that shouldn’t be indexed should be removed from the XML sitemap.

A noindex robots directive is one signal of many to indicate whether a URL should be indexed or not. Canonicals, internal links, redirects, hreflang, sitemaps etc. all contribute to the interpretation. Google doesn't override directives for the fun of it, ultimately it is trying to help!

Where contradictory signals exist, such as canonical and noindex being present on the same page, Google will need to choose which hint to take. In general, Google will tend to choose the canonical over noindex.

Submitted URL not found (404)

You submitted these URLs through an XML sitemap, but it appears the URLs don’t exist.

This type is highly similar to the “Not found (404)” type we covered earlier, the only difference being that in this case, you submitted the URLs through the XML sitemap.

Action required:

- If you find important URLs listed, restore their contents or 301 redirect the URL to the most relevant alternative.

- Otherwise, remove these URLs from the XML sitemap.

Submitted URL seems to be a Soft 404

You submitted these URLs through an XML sitemap, but Google considers them “soft 404s”. These URLs may be returning a HTTP status code 200, while in fact displaying a 404 page, or the content on the page gives the impression that it’s a 404.

This type is highly similar to the Soft 404 type we covered earlier, the only difference being that in this case you submitted these URLs through the XML sitemap.

Action required:

- If these URLs are real 404s, make sure they return a proper 404 HTTP status code and are removed from the XML sitemap.

- If they’re not 404s at all, then make sure the content reflects that.

Submitted URL returned 403

You submitted these URLs through an XML sitemap, but Google wasn't allowed to access these URLs and received a 403 HTTP response.

This type is highly similar to the one below, the only difference being that in the case of a 401 HTTP response login credentials were expected to be entered.

Action required: if these URLs should be available to the public, provide unrestricted access. Otherwise, remove these URLs from the XML sitemap.

Submitted URL returns unauthorized request (401)

You submitted these URLs through an XML sitemap, but Google received a 401 HTTP response, meaning they weren’t authorized to access the URLs.

This is typically seen for staging environments which are inaccessible to the world by using HTTP Authentication.

This type is highly similar to the “Blocked due to unauthorized request (401)” type we covered earlier, the only difference being that in this case you submitted these URLs through the XML sitemap.

Action required: investigate whether the 401 HTTP status code was returned correctly. If that’s the case, then remove these URLs from the XML sitemap. If not, then allow Google access to these URLs.

Frequently asked questions about the Index Coverage report

What information does the Index Coverage report contain?

The Index Coverage report provides feedback from Google on how they fared when crawling and indexing your website. It contains valuable information that helps you improve your SEO performance.

When should you use the Index Coverage report?

While Google says that the Index Coverage report is only useful for sites with more than 500 pages, we recommend that anyone who heavily relies on organic traffic uses it. It provides so much detailed information and is much more reliable than using their site: operator to debug indexing issues, you don't want to miss out on this.

How often should I check the Index Coverage report?

That depends on what you’ve got going on at your website. If it’s a simple website with a few hundred pages, you may want to check it once a month. If you’ve got millions of pages and add thousands of pages on a weekly basis, we’d recommend checking the most important issue types once a week.

Why are so many of my pages listed with the “Excluded” status?

There are various reasons for this, but we often see that the majority of these URLs are canonicalized URLs, redirecting URLs and URLs that are blocked through the site’s robots.txt.

Especially for large sites, that adds up quickly.

- "Indexed, though blocked by robots.txt": what does it mean and how to fix?

- Submitted URL seems to be a Soft 404: what does it mean and how to fix?

- Submitted URL has crawl issue: what does it mean and how to fix?

- Duplicate without user-selected canonical: what does it mean and how to fix?

- Crawled - currently not indexed: what does it mean and how to fix it?

- Discovered - currently not indexed: what does it mean and how to fix?

- Indexed, not submitted in sitemap: what does it mean and how to fix?

- Submitted URL marked ‘noindex’: what does it mean and how to fix?

- Crawl anomaly: what does it mean and how to fix?

- Alternate page with proper canonical tag: what does it mean and how to fix it?

- New Index coverage issue detected for site: how to fix?

- Submitted URL not found (404): what does it mean and how to fix it?

- Duplicate, submitted URL not selected as canonical: what does it mean and how to fix it?

- Duplicate, Google chose different canonical than user: what does it mean and how to fix it?

![Aleyda Solís, International SEO Consultant & Founder, [object Object]](https://cdn.sanity.io/images/tkl0o0xu/production/73d8baa7c1d1c3d49c44a650e65fa4bdd1383cb3-1765x1765.png?fit=min&w=100&h=100&dpr=1&q=95)

![Lily Ray, Sr. Director of SEO & Head of Organic Research, [object Object]](https://cdn.sanity.io/images/tkl0o0xu/production/0d9e4ea698af1b14676e83dd11ebee1e89d46be1-800x800.png?fit=min&w=100&h=100&dpr=1&q=95)